This week, guest Kevin Smith, M-Star’s Director of Software Development, joins John to discuss computational fluid dynamics in the cloud.

“We just want our clients running simulations, adding value to their enterprises, doing good science.”

– Kevin Smith

In this episode, John and Kevin discuss:

- The history and emergence of cloud-based computing for CFD

- The sticking points of using GPU instances in the cloud

- How to get up and running with cloud-based GPU resources and CFD software – fast and frictionless

Questions about deploying GPU resources and CFD in the cloud? Contact us to join the conversation.

John Thomas: So we’re talking today with Kevin Smith, the director of software development here at M-Star CFD, about cloud computing – some of the pros and cons as related to computational physics and CFD. Kevin, thanks so much for being here.

Kevin Smith: Yeah, my pleasure.

John Thomas: So, Kevin, a bit of background. Tell me a bit about how you arrived where you are today. How did you get familiar with computational physics? How did you become an expert in cloud-based computing? Tell me how you got from, say, high school to today as an expert in, again, computational physics on the cloud.

Kevin Smith: Well, I went to college for mechanical engineering, and I guess throughout my college experience, I did a little programming here and there with the classes and stuff. Went on to Penn State to really focus on fluid mechanics, specifically computational fluid dynamics. So I started writing fluid mechanics code during that master’s degree.

After that, I went into the naval architecture field and really started writing software then and applying my fluid mechanics in that job. That job lasted about four years, and then after that I went to the Applied Physics Lab, Johns Hopkins, and that’s where I really went back to what I was working on in my master’s degree using the OpenFOAM CFD tool. And that’s an open source CFD software where you can open up the source code, make edits, and do something that’s a little bit more custom or is not really available out in the commercial tools yet.

Did that job for a while, and then you and I met there, and that’s when we started M-Star. And since then, I’ve been really more focused on the software development aspects of things more so than the fluid mechanics part. So I’m responsible here at M-Star for the graphical user interface, the production deployments of things, you know, sort of the operations of the company.

So yeah, in that I’ve gotten familiar with different cloud technologies – AWS, Azure, Google Compute – just the different architectures that we need to run M-Star on that’s leveraging GPUs, stuff like that.

John Thomas: So just to contextualize this, Kevin, what’s the cloud?

Kevin Smith: [Laughs] The cloud is basically someone else’s data center. It’s just a remote resource that you’re renting or maybe you’re reserving for some period of time. When people think about the cloud, they usually think about their data being there. So you might think of something like Dropbox or OneDrive as “the cloud” in that context.

In other contexts, in the context of M-Star, we usually think about the cloud as like Amazon Web Services, Google Compute. In those environments, you can spin up resources as needed, spin them down, and you’re really just renting someone else’s computer and using it for some period of time.

John Thomas: Got it. So beyond just storing data and pictures and files and whatnot, you can grab machines to run software on and do calculations on.

Kevin Smith: Yep.

John Thomas: When did the cloud come into the consciousness of companies, would you say? Were you running simulations on the cloud in grad school or when did you see this kind of emergence of cloud-based computing for scientific computing and other scientific applications?

Kevin Smith: Well, I wouldn’t say people were doing this as far as I knew back when I was in grad school – that was in ’09. I think this is more of like in the past five to ten years, people have become more and more and more interested in this for scientific computing because I guess ten years or so ago people were still primarily running on CPUs. And the thing with running on CPUs is you have to have a whole lot of them, you have to have a lot of memory, have to have the interconnects. Standing up those infrastructures on the cloud wasn’t really there as far as I knew. I think there was maybe one or two providers that were doing that, but I think that’s changing, definitely now.

Now, all the cloud providers offer GPU type instances that you can get a hold of, you know, an instance with four, eight, even 16 GPUs on one computer up to a terabyte of GPU memory. So I mean, I think there’s definitely a shift happening over the past five years towards cloud computing for science applications.

John Thomas: And so what’s driving that? Is it just because companies want to be more efficient with their capital expenditures because sometimes project scale changes? Why are companies moving towards cloud-based HPC?

Kevin Smith: I think everybody comes up with their own excuse. I mean, costs to acquire new hardware is definitely, I think, a consideration there because, I mean, just one of these V100 GPUs is maybe $10,000. And if you’re going to put eight of those in a specialized piece of computational hardware, I mean, the price goes up. So if it’s just a small team of like, you know, five to ten engineers in a group that need a resource to run this new CFD tool, I think it might be difficult for them to also buy that resource. Then you have to bring it in house and then manage it, because you need to put a man on to run and maintain that hardware. So I think one of the reasons that people are going to the cloud is you can just spin these things up. It’s just the cost of spinning up is much lower. So I think that’s definitely one major reason people are going on the cloud.

John Thomas: That makes sense. And so to that point, it sounds frictionless. It sounds like you say, you spin up. We just kind of boot up a machine. Are there sticking points? Are there difficulties in just logging in to AWS or GCP or whatnot, booting up an eight GPU instance or four GPU instance, and just running away with your simulations? Are there any sticking points that come up along the way?

Kevin Smith: Oh yeah, definitely. One sticking point that we’re seeing, and definitely recently, is just the scarcity of these GPU instances. Sometimes you can’t bring them up because there are so many people trying to obtain the resource, so even on AWS or even Google sometimes you might not be able to bring it up when you need it.

John Thomas: Interesting.

Kevin Smith: And so the ability to flip the switch on and off, depending upon the availability of that particular resource, it may or may not come up. That’s definitely a consideration for some people. Some people just turn them on and leave them on, so they don’t have to worry about that.

But I think the other major sticking point would be just trying to adhere to your enterprise IT security policies because being a cloud resource, the security implementation is just inherently more complex, and it’s not behind the, you know, the so-called firewall of the enterprise now. So there’s going to be a VPN connection. There may be additional data security considerations, you know, specific to an enterprise. So there could be like an IT security policy that could make a, you know, just turning on the switch harder.

You know, a smaller company without those restrictions might just be able to turn it on and get going. But larger companies may require more security implementation, so that might be a sticking point.
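As an illustration of the capacity problem described above, one common way to cope with a GPU instance that will not come up is to retry the request with an increasing delay. The following is a minimal Python sketch under stated assumptions, not M-Star’s or any cloud provider’s tooling: `launch_with_backoff`, `CapacityError`, and the `launch` callable are hypothetical names standing in for whatever provider call actually requests the instance.

```python
import time


class CapacityError(Exception):
    """Hypothetical error: the provider has no GPU capacity right now."""


def launch_with_backoff(launch, attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call launch() until it succeeds, doubling the wait after each failure.

    launch is a hypothetical zero-argument callable that requests the
    instance and raises CapacityError when none is available.
    """
    for attempt in range(attempts):
        try:
            return launch()
        except CapacityError:
            if attempt == attempts - 1:
                raise  # out of retries: let the caller decide what to do
            sleep(base_delay * 2 ** attempt)  # waits 1x, 2x, 4x, ... base_delay
```

Teams that cannot tolerate the wait may prefer the other approach mentioned above: leave the instance running and accept the cost.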

John Thomas: That makes sense. When I Google cloud-based providers and whatnot, I see some bare metal providers. These would be like AWS, as I might call them, or GCP or Azure, and some other companies that kind of bundle the cloud up with some front-end software that makes it easier to kind of access software that you might be using and spin up instances. Some of these companies are companies like Nimbix, Rescale, et cetera. You know, what are the pros and cons of going straight to bare metal, like AWS, versus using something of an intermediary who has some of the software hosted already? Any thoughts on those two kinds of bifurcations in the space?

Kevin Smith: Well, it depends on your needs. If your workflow fits into the paradigm that’s implemented by somebody like Rescale or Nimbix, I think those platforms are great. I guess what degrees of freedom do you really need for your implementation? If you don’t really care and you just want to start up something, maybe one of those cloud providers would work well. If you have a little bit more knowledge and you’re able to just sort of turn these things on and off yourself – I mean, AWS and Google can also be very easy to use, but you know, that depends upon your level of control over spinning up and down resources. There may be a security policy in place at your organization that prevents you from turning on a new computer that didn’t previously exist.

John Thomas: Right.

Kevin Smith: So there could be, depending upon sort of the policies of your environment and the flexibility that you need, you may want to pick one or the other. So, yeah, that’s really the difference.

John Thomas: That makes sense.

Kevin Smith: So we’ve talked about the cloud. One other thing that we’re seeing lately is more and more interest in container technology. And if you’re not familiar with containers, it’s basically kind of like with the cloud, you can just sort of turn on a computer and you don’t really need to know much about turning on computers. You just say, I want a computer with four GPUs on it and you turn it on. Yeah, you didn’t need to know anything about NVLink technology, NVSwitch technology, how to get these things connected together. You didn’t really need to know much of anything about turning on the computer.

Similarly, with containers, you can just sort of turn on M-Star without really needing to know how to install M-Star. So it’s sort of like a parallel in terms of deployments.

Containers are really just an easy way to start up and run M-Star. We’ve been working with NVIDIA recently to get our application published in their NVIDIA Container Catalog. So that should be coming out pretty soon here.

I think that’s going to help people deploy these things in either an enterprise environment, if it’s an in-house resource, you can use containers, you can use containers on the cloud. So it just makes the whole process easier. If you can just spin up a new computer and then instantly run the program, it’s just that much easier.

John Thomas: Yeah, that makes a lot of sense. It can be easy to set up the hardware, but if the software is still a sticking point and getting it installed, well then you haven’t made much progress. It seems like both these problems have to be solved – efficient ways to get good HPC computer resources on the cloud, then efficient ways to get your software up and running on those resources. And so I see how the container and the cloud-based computing environment kind of go hand in hand and maximize the efficiency of users trying to get up and running in a cloud-based environment.

Kevin Smith: Yeah.

John Thomas: So is M-Star available for download now from the NVIDIA Container Catalog?

Kevin Smith: Well, I clicked the button today.

John Thomas: [Laughs]

Kevin Smith: I don’t think it’s publicly available yet, but we are in their system and it should be getting published soon so people will just be able to run M-Star. You know, obviously if they have a license, they should be able to run it on any machine that supports Docker.

John Thomas: Awesome. That’ll make it a little more frictionless even.

Kevin Smith: Yeah, I mean, we want our people, we want our clients focusing on running simulations. That’s sort of like our philosophy on computational fluid dynamics. We don’t want people worrying about, you know, what turbulence model that they need to use. We just want them modeling. So, yeah, I think we’re just trying to take that philosophy elsewhere into how people interact with the tool, whether that be how they spin up a machine or how they’re installing and running the code. We want the process to be easy and accessible so people can just focus on their engineering rather than the ins and outs of technology.

John Thomas: Yeah, that makes a lot of sense. OK, so where could people go to learn more? Are there some good resources that you go to learn more about deploying in the cloud or trying to maximize cloud-based technologies? Are there some resources you’d like to share?

Kevin Smith: Sure. I would highly suggest our own documentation for this.

John Thomas: [Laughs]

Kevin Smith: So just because the documentation for GPU plus cloud is sort of limited, we’ve compiled some good documentation there about how to just run on AWS. Like that’s one of the documents, and instructions on how to just run on Google Compute. And just how to run our tool.

These instructions are pretty easy. I mean, I guess it depends upon again, the security restrictions for your enterprise, that can quickly increase complexity of an instance rollout, but fundamentally, our documentation, AWS and Google’s documentation are also very good. They’re very exhaustive in how to manage and run these things.

John Thomas: Yeah, we’ll include links to the documentation in the podcast description. OK, Kevin, I understand, so I can pretty easily spin up a pretty nice HPC resource. I started up. I have a cursor blinking at me. Then what? How do I get my software installed? How do I actually start using the cloud to run my software and ultimately get the answers I need?

Kevin Smith: You can install the software manually, or you can leverage what’s called container technology or Docker. There are different names for it. And that lets you, you know, almost immediately bring in the application and start running it without being concerned about the installation particulars or anything like that. So I definitely would recommend looking into how to run with containers if you’re looking to make your startup easier.

John Thomas: So it sounds to me like I can boot up this multi-GPU cloud-based resource. I can grab a container and I can get up and running without having to worry about the switching fabric, without having to worry about whether I have a CUDA or MPI installation or whatnot, I can just grab the hardware, grab the container and go.

Kevin Smith: Yep, yep, that’s the idea.
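To make the “grab the hardware, grab the container and go” workflow concrete, here is a minimal Python sketch that assembles a `docker run` command line without executing it. The `--gpus`, `-v`, `-w`, and `-e` options are standard Docker flags (`--gpus` needs the NVIDIA Container Toolkit on the host). Everything else is an assumption for illustration: the image name is a placeholder, the mount path is arbitrary, and passing the license server through an `RLM_LICENSE` environment variable is a guess at how a container might be pointed at a license server, not M-Star’s documented setup.

```python
import shlex


def docker_run_command(image, case_dir, license_server, gpus="all"):
    """Build (but do not execute) a `docker run` command line.

    image          -- hypothetical container image name
    case_dir       -- host directory holding the simulation input files
    license_server -- license server address in port@host form (assumed)
    """
    return [
        "docker", "run", "--rm",
        "--gpus", gpus,                         # expose host GPUs to the container
        "-v", f"{case_dir}:/work",              # mount the case directory
        "-w", "/work",                          # start in the mounted directory
        "-e", f"RLM_LICENSE={license_server}",  # assumed license variable
        image,
    ]


cmd = docker_run_command(
    image="example.invalid/mstar:latest",  # placeholder, not a real image
    case_dir="/home/user/case",
    license_server="5053@license-host",    # placeholder address
)
print(shlex.join(cmd))
```

Building the command as a list keeps each argument separate, so it can later be handed to `subprocess.run` without shell quoting problems.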

John Thomas: That’s awesome. That’s exactly how it should be. And kind of where we want to be, we don’t want our users thinking about the nuts and bolts of how MPI was compiled and what’s going on within the GPU.

We want them thinking about what the predictions they’re getting are telling them and how they can use this to make an informed engineering decision. So I think that goes hand in glove with kind of where we want to be as a firm.

Kevin Smith: Yeah, that’s yeah, that’s exactly right.

John Thomas: So how does the existence of the cloud inform M-Star development? Is this something that is something of an afterthought where you have the product and you see if it might shove in the cloud? Is this informing your daily development decisions? How much does the presence of the cloud and the participation of users on the cloud inform your daily development decisions and deployment decisions with respect to M-Star CFD?

Kevin Smith: It’s definitely not an afterthought. I use the cloud to do a lot of my own testing, so I mean, sort of by definition that the cloud is integrated in our development process.

John Thomas: That’s cool.

Kevin Smith: We have fresh documents on how to quickly install M-Star from scratch, you know, just using a couple of lines of code. It’s definitely part of, I think, our development practice. We see it as it’s going to be here a long time, if not take over, you know, largely how people approach this problem. So yes, and I think it’s definitely part of how M-Star works.

We can support remote visualization of both the pre-processor and the post-processing. So we’ve written documentation on how to set those sort of architectures up on AWS. I feel like we’ve sort of seen a lot with respect to the cloud and deployments at this point that there’s less and less that’s surprising. And I definitely think that M-Star is very cloud friendly, cloud ready.

John Thomas: So, OK, I got my hardware, I got my software installed, then I might have a pesky licensing question. I hear from some clients that getting your software license on the cloud can be a pain, that is, getting it connected to your licensed software. Vendors often have funny constraints related to license usage on the cloud. How does M-Star approach this? How does M-Star solve this problem?

Kevin Smith: We use what’s called the Reprise License Manager. This is a license management technology that lets you use what’s called a floating license. This floating license is obtained from our floating license server. So in the context of like the cloud, you could set up an M-Star license server. We could host it for you. You could put your license server on the cloud as well, and it’s just a little configuration. You can configure the license server and you should be good to go.

The way our licensing works is that each simulation consumes one M-Star license. You don’t count number of cores or anything like that. We just count the number of the simulations. And so at this point, it’s pretty painless and we can definitely make license usage very easy.
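The per-simulation counting described above can be illustrated with a toy model. This Python sketch is not the Reprise License Manager API or M-Star’s implementation; `FloatingLicensePool` and its methods are hypothetical names used only to show that the license count tracks running simulations and ignores how many GPUs or cores each one uses.

```python
class FloatingLicensePool:
    """Toy model of a floating license server that counts simulations only."""

    def __init__(self, total_licenses):
        self.total = total_licenses
        self.in_use = set()

    def checkout(self, simulation_id, gpus=1):
        """Reserve one license for a simulation; gpus does not change the count."""
        if simulation_id in self.in_use:
            return True   # this simulation already holds its license
        if len(self.in_use) >= self.total:
            return False  # every license is busy
        self.in_use.add(simulation_id)
        return True

    def checkin(self, simulation_id):
        """Return the license when the simulation finishes."""
        self.in_use.discard(simulation_id)

    def available(self):
        return self.total - len(self.in_use)


pool = FloatingLicensePool(total_licenses=2)
pool.checkout("mixer-a", gpus=8)  # one license, even on eight GPUs
pool.checkout("mixer-b", gpus=1)  # one license
print(pool.available())           # 0
print(pool.checkout("mixer-c"))   # False until a simulation finishes
```

Once `mixer-a` is checked in, its license returns to the pool and `mixer-c` can start, regardless of the hardware it runs on.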

John Thomas: I mean, that’s nice because you hear these stories about how someone wants to run on a certain number of CPUs. They’re paying for additional packs. If people want a GPU, they’re paying for additional licenses or whatnot. It sounds like it’s a pretty good strategy here that allows us just to run wherever we want to on however many GPUs you want to without having to pay a toll for every instance of GPU or cloud-based deployment.

Kevin Smith: Yeah, we don’t like our customers dealing with license issues. We like a simple license policy. Again, we just want our clients running simulations, adding value to their enterprises, doing good science.

John Thomas: Awesome. Kevin, this was great. Thanks so much for taking time to join us today.

Kevin Smith: Yeah, my pleasure. Thank you.

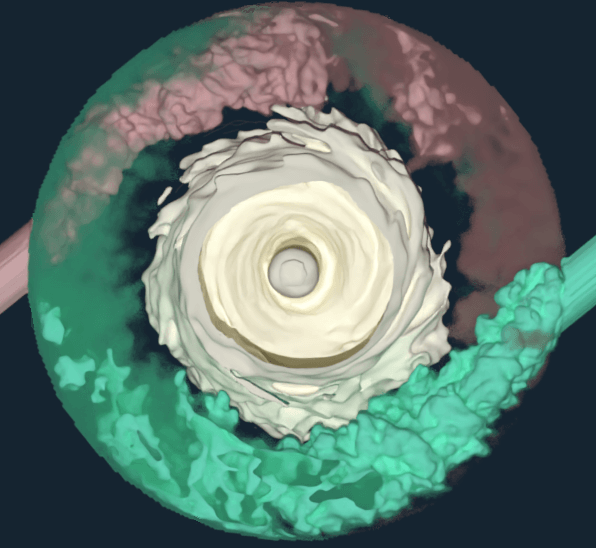

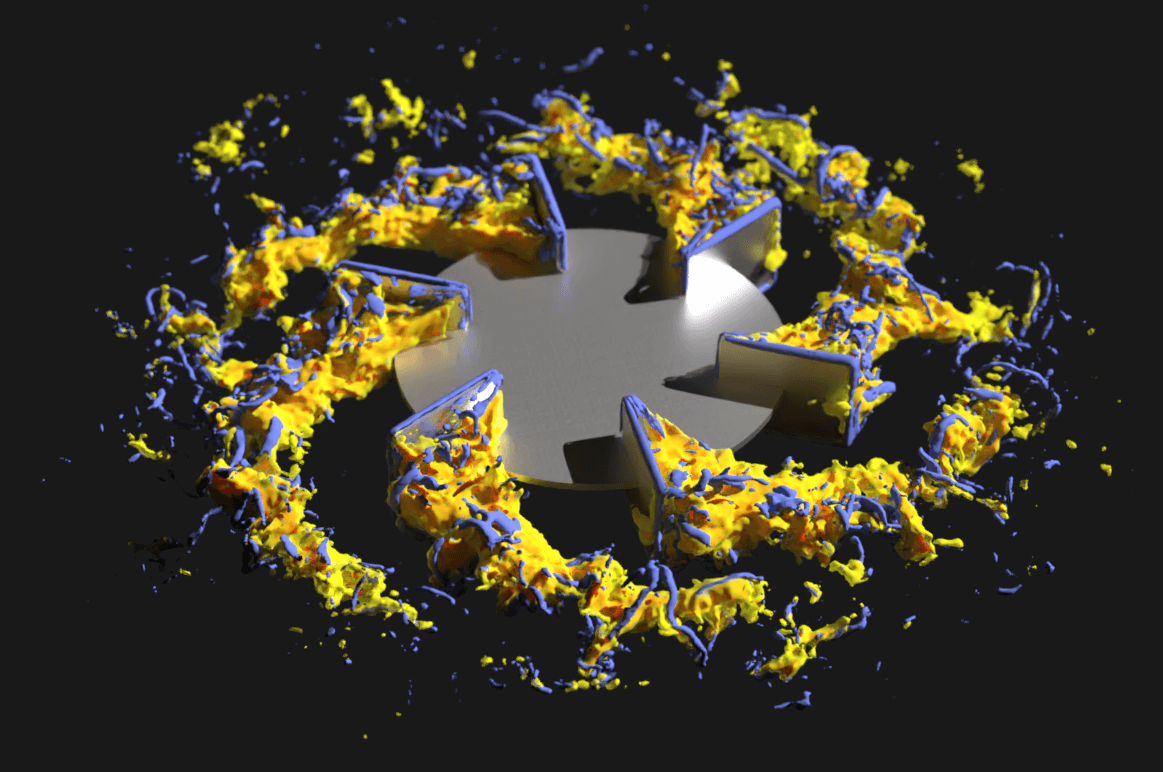

Explore the Scientific R&D Software
