This week, guest Aaron Sarafinas, Principal of Sarafinas Process & Mixing Consulting LLC, joins John to discuss one loaded question: “Will CFD or some CFD parallels ever replace experiment—and in an increasingly digitized world, what’s the role of experiment?”

“We have the opportunity to learn something when the experiment fails or it doesn’t give us the result we expect, and that’s where we can dig deeper.

And that’s where a tool like CFD can be helpful.”

– Aaron Sarafinas

Aaron, a thought leader in process development and mixing technology, offers the short answer – no – but the longer answer is worth a listen.

In this episode, John and Aaron discuss:

- Real-world examples of how simulation can be used and add value to process development efforts

- The role of experiment in an increasingly digitized world

- How CFD complements experiment (and vice versa)

John Thomas: Hi, everybody. Thanks for joining us today. Today we’re talking with Aaron Sarafinas from Sarafinas Process & Mixing Consulting. We’re grateful to have him here where we’re talking about, “Will CFD or some CFD parallels ever replace experiment: in an increasingly digitized world, what’s the role of experiment?”

Aaron, it’s a loaded question, it’s a loaded title. Glad you’re here! Thanks for tuning in.

Aaron Sarafinas: [Laughs] Thanks, John. OK. This is where I should say the short answer is no.

John Thomas: Okay.

Aaron Sarafinas: And then we can just be quiet for the next 20 minutes.

John Thomas: Okay. [Laughs] Yeah, I like to set it up that way.

Aaron Sarafinas: But you see, replace is not an appropriate word because really, CFD and experimentation are complementary, not competitive, and we can explore that more.

John Thomas: You’re setting the tone off right. Aaron, tell me, you spent a lot of years in industry. Then you broke off as sort of your own firm. And again, at this point, you’re a pretty well-established player, I’d say, in the process engineering community through your decades of work and your consulting work. Tell me how you got to now. How did you get to be somewhat of a thought leader in this space today?

Aaron Sarafinas: Since June of 2018, I’ve been an independent consultant, and I’ve been working with clients who need to achieve faster process development and easier scale up and who want to leverage advantaged mixing technology. Now, prior to that, I had been working with Rohm and Haas and Dow for almost 36 years.

John Thomas: OK, yeah.

Aaron Sarafinas: So really, I’m a process development person who just happens to specialize in mixing technology.

John Thomas: That’s a good bit. Yeah.

Aaron Sarafinas: And so I’ve been involved in the development, scale up and commercialization and troubleshooting for many products for a wide variety of businesses. And the thing is, the goal in process development isn’t “let’s come up with a pretty model.” It’s “let’s succeed commercially.” The goal of process development is commercial success. So when we talk about using any sort of tool, modeling tool or experimental tool, in process development, we have to be using the right tools, the right way, at the right time to build the process knowledge and achieve commercial success.

John Thomas: Mhhm. Yeah.

Aaron Sarafinas: So the thing of it is, process development is about making choices. Okay? And so we need to take a look at the types of processes that we want to push forward, that we want to go and say, “Yeah, let’s commercialize this” or actually we have a ton of process options, and we have to efficiently chop off the ones that aren’t really winners. And the more efficiently we can do that by building process understanding, well, that’s where the use of all kinds of modeling can be critical.

John Thomas: That’s a very good contextualization, as it were. And I like how you have this kind of big tent philosophy on analysis and simulation. And I think what motivates this is the fact that in a lot of conferences we go to, these fields are kind of like siloed. You’ve got the computational modeling track, right, and down the hall you have the advanced experiment track, as it were. And I think the problem is, you get these groups and they miss this broader picture of: We have a common goal here and a shared enemy.

Our common goal is better processes and our common enemy is inefficiencies, right? And I’ll say complacency. I see the community breaking down in some sense into these groups. Do you see that happening as well? Whether you like it or not, do you see that partitioning?

Aaron Sarafinas: Well, that’s a challenge that we’ve had in chemical engineering for a long, long time. And long about 1999, 2000, AIChE formed the Process Development Division, which is really a way that we can sew together all these different silos into the common thing of process development, because really what we’re trying to do is get to some commercial goal. That’s why they pay us, right, to bring new products on board or to troubleshoot existing products. And our job is to bring the right tools to bear.

John Thomas: So when is CFD the right tool?

Aaron Sarafinas: Well, it’s interesting because I first got familiar with CFD in, I guess it was the late 1980s, when one of my Rohm and Haas colleagues called me up and said she was going to be working with this CFD code called PHOENICS. And she said, “Do you have any problems that you might want us to look at with that?” And so, I mean, my mixing work was involved in suspension polymerization, and we’d seen some really cool things about agitation effects and scale… I started describing that to her, and it’s a really complex multiphase system. And I said, “Yeah, this would be really cool if we can do this, this and this.” And she quite correctly said, “Yeah, the state of the technology in the late eighties is not quite sufficient to be able to solve that problem.” Well, maybe today we’re catching up on that.

John Thomas: Yeah.

Aaron Sarafinas: And so the whole notion of “let’s find a nail to hit with this hammer” isn’t quite the right way to do it.

John Thomas: That’s fair. That’s a good point.

Aaron Sarafinas: Instead, I started doing CFD sometime in the early 2000s, I guess, and at the time I was working in a group at Rohm and Haas where we were doing consulting to all the businesses in the corporation. And so one of our businesses had an issue with low productivity in their production unit. It’s a relatively simple process, but they were having fouling. Now usually my approach would be, “Let’s take some engineering correlations or scale something down and do some experiments.” But really, the complexity of the internals of the system spoke to the need for taking a CFD sort of approach. So, you know, it was the tool of choice to see if the flows matched their observations. I was using Fluent at the time, set up this really complex geometry, ran simulations and reviewed the results with the technical staff. And so when they saw where I had regions of zero flow, they were thrilled because it matched up exactly with where they saw the fouling in the system.

John Thomas: Yeah.

Aaron Sarafinas: So then it was pretty straightforward to say, “Well, I can’t use correlations, I can’t do experiments, let’s do some CFD on modifications to the system to get it to deliver some improved flow in these regions.”

John Thomas: That’s a good story. And you said something that I think is worth pulling on. You said, “where I can’t use correlations.”

Aaron Sarafinas: Yes, exactly.

John Thomas: That sounds like that’s the real target here. Nothing’s faster than a correlation.

Aaron Sarafinas: Right.

John Thomas: But sometimes you’re outside their purview and need some deeper physics. Sounds like that’s the place where we need to target our simulation efforts.

Aaron Sarafinas: Yeah, that tended to be the type of problem that I’d be taking a look at. You know, there’s another case where we were looking at a wastewater treatment problem, and we deduced that it was this equalization tank, which is nothing more than a big concrete-block sort of rectangular cavern that fluid would come into at different times and different concentrations. And the idea is that the concentrations get smoothed out before going to the downstream waste treatment system.

John Thomas: Sure.

Aaron Sarafinas: Well, the problem was they were getting these surges in concentration that weren’t really making sense. So we decided to take a look using CFD at a dynamic simulation and looked at the orientation of the nozzles they had in the system. Jet mixing is again something that there are good correlations on if you’re in a big storage tank or some other well-defined system. But CFD was necessary for the really complex geometry and the different things we could do to fix it. Well, it ended up that we identified the problem and made simple modifications, just something where the maintenance guy comes in and makes some adjustments on these different nozzles. And he’s done. A zero-capital solution eliminates the problem. But again, this was a really complex geometry where we needed to characterize the problem and then do some quick screening of solutions, and where it would be tough to scale down experimentally.

John Thomas: Right.

Aaron Sarafinas: And the correlations just didn’t fit.

John Thomas: That makes sense. These are good success stories of how simulation can be used and add value, which is nice. Now, within this theme of big tent process engineering, what can CFD learn from experiment? I mean, here we come in guns blazing, saying, “Hey, we get to solve physics from first principles. We can use empirical relationships here, grounded in the first principle of turbulence theory.”

Aaron Sarafinas: Mm-Hmm.

John Thomas: Where are CFD’s biggest blind spots, I should say, and where are our weaknesses as it were as we try to digitize processes and model them entirely using digital frameworks?

Aaron Sarafinas: We can model some really cool things. OK? There’s no question about it, and it’s just a question of “when do we need to model the right things?” At Rohm and Haas, my buddy Dave Kolesar used to talk about model-guided experimentation.

John Thomas: That’s interesting.

Aaron Sarafinas: And because of our size, we needed to be nimble. We didn’t have huge stacks of people to give them a problem, go away and come up with some sort of answer. Now we had to get the right answer quickly, and so we had ways of going and having CFD and experimentation work in a directed and complementary way.

OK, we did that for all modeling too, but I think we would use modeling to screen our process options, but also direct the experiments. And yes, you need experiments to generate the data you may need to go and put parameters into your model. But also, one really powerful thing about a model is you can say, “I can do the experiments in silico,” which I guess is the in-vogue way of saying that.

John Thomas: It’s very vogue, yeah.

Aaron Sarafinas: And so the model can basically point us to experimental conditions or equipment configurations that could lead to a better solution.

John Thomas: I like that.

Aaron Sarafinas: Now that’s model-guided experimentation. You build the process understanding to make predictions. You influence the experiments that arrive at a more optimal process condition. And that’s what’s going to lead to commercial success faster.

John Thomas: That makes a ton of sense. The perspective we’re arriving at, I think, is that what experiments are really good at is maybe that last-mile confirmation, right? Like, you can use the simulations to guide where we want to focus our possibly more expensive and more resource-intensive experiments to get the most return on what might be a harder thing to do. That’s a good narrative there.

Aaron Sarafinas: Exactly right. And certainly there have been many papers, you know, like at the last mixing conference, which I guess was 2018, where they would look at CFD to go and screen different equipment configurations. And then when they got the right one, they said, “This is the one that we’re going to run the confirmatory experiments on.”

John Thomas: Yeah.

Aaron Sarafinas: So again, they go hand in hand.

John Thomas: Yeah, I like that. I like that. Now, in your practice as an independent consultant, are you doing simulations to augment your analysis?

Aaron Sarafinas: Yes. And one of my specialties is helping clients efficiently figure out if mixing influences their process.

John Thomas: Mhmm.

Aaron Sarafinas: OK. And one of the ways that, you know, I do this is through an experimental method called the Bourne Protocol. I mean, I’ve been talking about this for ten years, more than ten years now, about the Bourne Protocol, and really, it’s a systematic way that we can look at micromixing and mesomixing effects, mixing at the molecular level and mixing at the level where the feed plume comes in.

John Thomas: I just got to say I hear about it all the time. You’re evangelizing it very well, because from all corners of the globe, people want to talk to you about meso- and micromixing. And I think I’m seeing why, because you’re being very evangelical.

Aaron Sarafinas: And the thing of it is it’s a simple experimental protocol.

John Thomas: Yeah.

Aaron Sarafinas: When I’m telling people to do that, they say, “Oh, Aaron, I’ve got a mixing-sensitive process,” and I say, “Well, what happens when you change the agitator speed?”

Aaron Sarafinas: “Oh, I never change the agitator speed because it’s mixing sensitive.” Well, how do you know?

John Thomas: Yeah.

Aaron Sarafinas: Right? And so this is just a systematic way to change the impeller speed and feed rate and feed location to be able to look at the process response. It’s inherently experimental. And what I’ve been trying to do is make it less empirical. Okay?
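
The three factors named here (impeller speed, feed rate and feed location) lend themselves to a simple run plan. The sketch below is only an illustration of how such a plan might be laid out. It assumes a full-factorial layout and made-up factor levels, neither of which is stated in the conversation, and the helper name `build_run_plan` is hypothetical.

```python
from itertools import product

# Hypothetical factor levels, chosen only for illustration.
impeller_speeds_rpm = [100, 200, 400]
feed_rates_ml_min = [10, 50]
feed_locations = ["surface", "impeller"]


def build_run_plan(speeds, feed_rates, locations):
    """Return every combination of the three factors as a list of dicts.

    A full factorial is an assumption made for this sketch; a real study
    might use fewer, more targeted runs.
    """
    combos = product(speeds, feed_rates, locations)
    return [
        {
            "run": index + 1,
            "speed_rpm": speed,
            "feed_rate_ml_min": rate,
            "feed_location": location,
        }
        for index, (speed, rate, location) in enumerate(combos)
    ]


run_plan = build_run_plan(impeller_speeds_rpm, feed_rates_ml_min, feed_locations)

# Print the plan; the measured process response would be recorded per run.
for run in run_plan:
    print(run)
```

Each row is one experiment; comparing the measured response across rows shows whether the process actually responds to the mixing factors.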

John Thomas: Okay, okay.

Aaron Sarafinas: And so what I’ve done is taken this protocol and the factors and the responses, and I put them in a scalable micromixing, mesomixing operating space (there’s got to be a fancier way to say that that’s not nearly as long), and in doing that, I allow people to see regions where their process succeeds and where their process fails.

John Thomas: Mm. Got it.

Aaron Sarafinas: And you avoid the bad neighborhoods and you go to the good ones, okay, in terms of your process – and it’s scalable. And so the thing of it is, this transformation between their factors and this operating space means I need to know the energy dissipation locally.

John Thomas: Mm-Hmm.

Aaron Sarafinas: And I need to know the velocity profiles.

John Thomas: Yeah.

Aaron Sarafinas: And I tell you, there are studies in the literature and there are some rules of thumb. But my experience is that, you know, M-Star is quite useful in determining the profiles that then I can use to get a more reliable transformation into this operating space.
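
To see why the local energy dissipation matters for this kind of mapping, consider one widely cited estimate of the micromixing time, the engulfment-model expression of Bałdyga and Bourne: tau_E ≈ 17.2 · sqrt(ν / ε), where ν is the kinematic viscosity and ε is the local energy dissipation rate. The conversation does not say which expression Aaron uses for his operating space, so treating this one as representative is an assumption, and the ε values below are made up for illustration.

```python
import math


def engulfment_time_s(kinematic_viscosity_m2_s, local_dissipation_w_kg):
    """Estimate the micromixing (engulfment) time in seconds.

    Uses tau_E = 17.2 * sqrt(nu / epsilon). Both inputs must be positive.
    """
    if kinematic_viscosity_m2_s <= 0 or local_dissipation_w_kg <= 0:
        raise ValueError("viscosity and dissipation rate must be positive")
    return 17.2 * math.sqrt(kinematic_viscosity_m2_s / local_dissipation_w_kg)


# Water-like kinematic viscosity (m^2/s).
nu_water = 1.0e-6

# Illustrative local dissipation rates (W/kg), e.g. far from vs. near an impeller.
for epsilon in (0.01, 1.0, 100.0):
    tau = engulfment_time_s(nu_water, epsilon)
    print(f"epsilon = {epsilon:g} W/kg -> tau_E = {tau:.5f} s")
```

Because the time scales with the inverse square root of ε, a hundredfold difference in local dissipation between two feed locations changes the estimate tenfold, which is why a tank-averaged value can mislead.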

John Thomas: Well, now you’re making me blush, but thank you. I’m glad you’re finding utility out of M-Star.

Aaron Sarafinas: And so the thing of it is, I’ve been saying we’ve needed more reliable approaches to the energy dissipation profiles and velocity profiles. There was a very good paper by Bristol-Myers Squibb at, I guess, the 2020 AIChE Annual Meeting, where they used M-Star to go and characterize the distribution and got a more reliable characterization in this operating space.

John Thomas: Yeah.

Aaron Sarafinas: So it’s actually, again, a very complementary tool that then I can go and say, here’s a scalable way that we can map out your process and give clients more confidence in scale-up. I mean, just this week, I had a longtime client come to me and say, “Oh, we want to scale this and we want to do this volume change.” And I said, “Well, let me look at it using M-Star.”

John Thomas: Mm hmm.

Aaron Sarafinas: And what I observed was there was quite a difference in the energy dissipation profiles, which influenced the way we would go and set the operating factors at the production scale.

John Thomas: Yeah, yeah.

Aaron Sarafinas: And it also indicated some experiments that we would want to do…

John Thomas: That’s interesting.

Aaron Sarafinas: … and confirm that, you know, it could be that both these conditions are in a region where the process is stable. Well, guess what? Run the experiment.

John Thomas: Yeah.

Aaron Sarafinas: You see, one of the issues in why experiments are going to be essential is, I can’t necessarily efficiently quantify all of the physics and chemistry to go into the models.

John Thomas: Yeah, that’s true.

Aaron Sarafinas: I can do that for some systems. Some systems are well characterized, but other systems I need to just say, “we need to run the experiments.”

John Thomas: Yeah.

Aaron Sarafinas: You learn a lot when the experiment doesn’t work; that’s when we actually learn something. It’s nice to get the validation that, “Oh yes, it worked just fine,” but we have the opportunity to learn something when the experiment fails or it doesn’t give us the result we expect, and that’s where we can dig deeper. And that’s where a tool like CFD can be helpful.

John Thomas: That’s great. Aaron, this has been fantastic. This has been a great kind of multi-decade overview of applications, and not many people could command that kind of perspective as it were. So I appreciate that. Any last thoughts, any kind of guidance? This podcast is called the CFD Mixtape. If you had to put one little pill into the minds of a bunch of CFD practitioners from someone that’s seen as a big tent chemical engineering guy, what would that takeaway message be?

Aaron Sarafinas: A few things. Models require validation. Okay. And so make sure that, you know, when people develop a model, they’re working in a range where they select parameters that they think make sense relative to what has been validated or could be validated.

The other piece of it is, and it comes back to when I was leaving Dow and starting my business, and I was trying to figure out what do I call my company? OK, do I call it mixing and process consulting or process and mixing consulting? And one of the engineers who was working with me at the time looked at me in a very matter of fact sort of way, she said, “Process always comes first.”

John Thomas: That’s stark, yeah.

Aaron Sarafinas: And so we need to think about the process because good mixing is defined by what it does in our particular process. So look at the process first and build the model that’s appropriate to describe the type of phenomena that you need to understand in the process.

John Thomas: That’s brilliant. And I have in my mind this mental picture of you tossing and turning in bed.

Aaron Sarafinas: [Laughs]

John Thomas: Just, I can’t, I can’t decide on this name. And then just like it dawns on you. But maybe I’m being overly dramatic.

Aaron Sarafinas: It didn’t dawn on me; I was told. And when I was told, it’s like, the answer was so obvious. Yeah, you know, and that’s the other piece of this, too, is process development is inherently collaborative.

John Thomas: Yeah.

Aaron Sarafinas: And the same thing goes with model building.

John Thomas: Yeah.

Aaron Sarafinas: OK. And so the higher degree of dialogue, the higher degree of interaction between the people doing the modeling work and the people with the problem or with the data – that’s where you arrive at a better solution.

John Thomas: Agreed, communication. I would like to bundle up communication with experimentalists. Brilliant. Aaron, this was great. I really appreciate you taking time to join us on today’s episode.

Aaron Sarafinas: This was fun. I enjoyed it.

John Thomas: For listeners, Aaron’s contact information is down in the description below. Thanks again for being here, Aaron, and thanks to everybody for tuning in to this week’s podcast.

Get in touch with Aaron Sarafinas at [email protected] or visit Sarafinas Process & Mixing Consulting LLC.

About M-Star

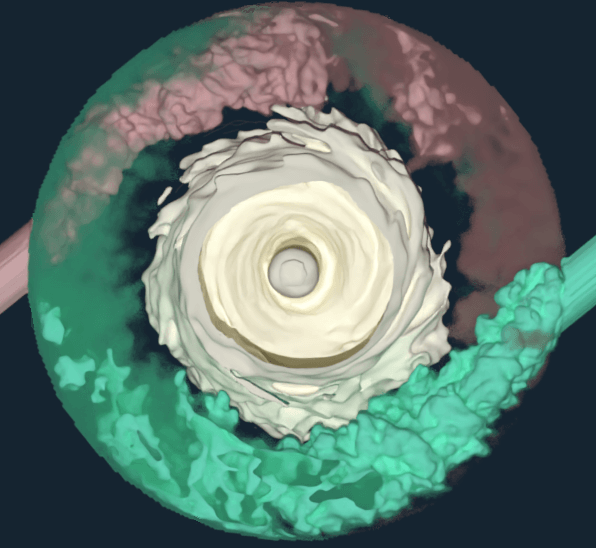

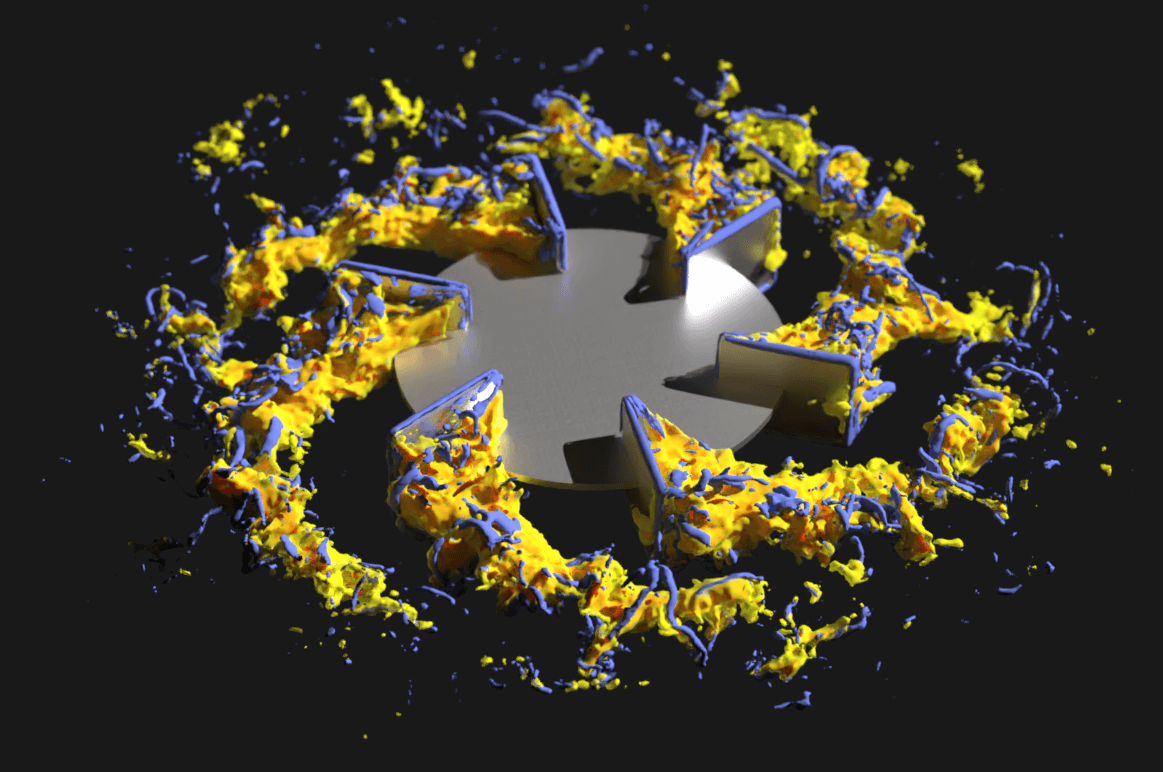

M-Star Simulations, LLC, is a software company focused on the development of computational tools for modeling momentum, energy and mass transport within engineering and biological systems. By pairing modern algorithms with graphics processing unit (GPU) architectures, this software enables users to quickly perform calculations with predictive fidelity that rivals physical experiment. These outcomes are achieved using a simple graphical interface that requires minimal user specification and setup time. Founded in Maryland in 2014, M-Star Simulations has grown to include commercial, government and academic users across North America, South America, Europe and Asia.

Explore M-Star’s website here and follow us on LinkedIn here.

Explore the Scientific R&D Software
